I love A/B testing!

It’s hard for me to imagine my life without it, and once you try it, you’ll never go back.

Why? Because A/B testing (also called split testing) can improve your effectiveness, and I don’t know about you, but I like it when my efforts pay off.

What is A/B testing?

In case split testing is a novelty to you, let’s start with a simple definition:

Split testing is about comparing two variants of “something” to identify which one performs better.

I am purposely using the word “something” because you can test numerous things, like your website design or your cold email templates. You can A/B test pretty much anything that comes to mind!

The A/B testing framework is nothing new. Here’s an interesting fact, if you are into history: it dates back about 100 years, when it was used in agriculture and medicine.

In the 1960s and 1970s, marketers adopted the concept to evaluate direct response campaigns. Clearly, it works, since it’s still in use today.

How do A/B tests work?

A/B tests are fairly straightforward. First, decide which element you would like to test and how many variants of that element you want to use.

Just because it’s called A/B testing doesn’t mean you can only use two variants.

Select your testing sample. Let’s say you would like to test an email subject line, and you have 5,000 subscribers or prospects that you want to email.

You can decide to test your variants on 10% of your total subscribers (500 people), meaning that the remaining 90% will receive the winning variant.

Image: AB testing at Growbots

In this example, 250 people will receive variant A while the other 250 will receive variant B. Next, define the criteria for your winning variant. Is it the open rate, the click-through rate or the response rate? It depends on what you are testing: if it’s a subject line, you’ll want to focus on the open rate.
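If you like to see the mechanics spelled out, the sampling step above can be sketched in a few lines of Python. This is a minimal illustration under my own assumptions, not how Growbots or any other tool actually does it: `split_sample` and `pick_winner` are hypothetical helpers, and the contact list is made up.

```python
import random

def split_sample(contacts, test_fraction=0.10, n_variants=2, seed=None):
    """Randomly pick a test sample and divide it evenly between variants.

    Returns (variant_groups, holdout): one list per variant, plus the
    remaining contacts who will later receive the winning variant.
    """
    rng = random.Random(seed)
    shuffled = list(contacts)
    rng.shuffle(shuffled)  # random assignment avoids biased groups
    test_size = round(len(shuffled) * test_fraction)
    test_group, holdout = shuffled[:test_size], shuffled[test_size:]
    # Deal the test group out round-robin so variant sizes differ by at most one.
    variant_groups = [test_group[i::n_variants] for i in range(n_variants)]
    return variant_groups, holdout

def pick_winner(results):
    """results maps variant name -> (opens, sends); returns the highest open rate."""
    return max(results, key=lambda name: results[name][0] / results[name][1])

# Hypothetical list of 5,000 prospects, as in the example above.
contacts = [f"prospect{i}@example.com" for i in range(5000)]
(group_a, group_b), rest = split_sample(contacts, seed=42)
print(len(group_a), len(group_b), len(rest))  # 250 250 4500
print(pick_winner({"A": (60, 250), "B": (75, 250)}))  # B
```

Note that `pick_winner` simply takes the higher open rate; it does not check whether the difference is large enough to be more than noise.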

You have to decide on the A/B test length. I know that you would like me to tell you how long it should last, but unfortunately… it depends.

In the subject line scenario, you probably don’t want to run the test for too long, because the sending time might start to affect the open rate and bias the results of the test.

I usually run the test for around 2 hours before deciding on the winning variant (I am referring to the subject line specifically).

What if your test results are inconclusive? That might indicate that the subject line doesn’t have much impact and that you should consider testing other elements. Go for something bigger.

“I thought that A/B testing was only good for marketing”

Well, think again, buddy! Split testing can benefit both sales and marketing, even though the two teams might be A/B testing different things. So if you’re in sales and you don’t use split testing, you are missing out.

Image: split testing only good for marketers? Nah…

If you don’t know who your customer is, don’t bother with split testing

This should be obvious, but if you don’t know who your customer is, running A/B tests is pointless. You won’t learn anything new apart from the fact that a certain percentage of the people who received your email prefer subject line A to subject line B. But who cares, if they are not even in your target audience?

Results from your A/B tests will only benefit you if you run them on prospects in your target audience. The purpose of split testing is to learn something new about your audience, not just any audience.

If you need more information about creating an ideal customer profile then check out this article.

So what can you A/B test? Here are a few A/B testing examples:

1. Subject lines

Because it all starts with your email being opened.

And that won’t happen if your subject line doesn’t appeal to your prospects. However, what’s “appealing” to one person is not so appealing to another. This is why it’s worth running a few tests: after a while, you might come up with a magic formula, a subject line that works pretty much every time.

You think it’s impossible?

Image: 80% open rate? Say what?!

We’ve managed to create an effective subject line formula after sending hundreds of thousands of campaigns. Now, on average, we get open rates of 80%, and so can you. Check it out.

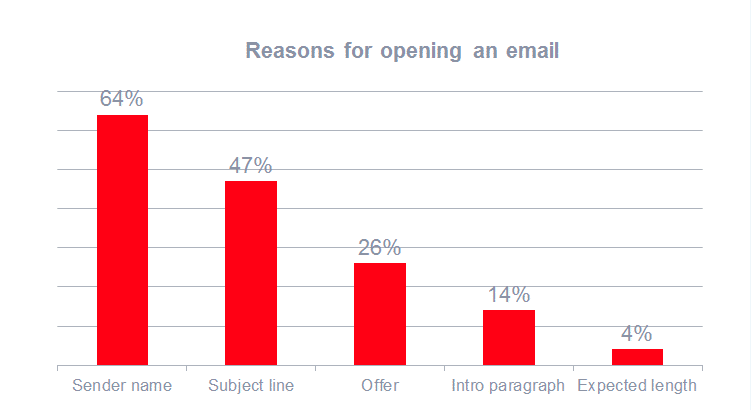

2. Sender’s name

Another potential A/B testing example is the sender’s name. You might think it’s trivial, but familiarity is a key factor here: 64% of people say that they open emails based on who they’re from.

Source: Superoffice

“If the ‘from’ name doesn’t sound like it’s from someone you want to hear from, it doesn’t matter what the subject line is,” says Copy Hacker.

Try including your name + your company’s name in the sender field, for example, Kasia at Growbots. Personalised “from” fields usually perform better than those which only include a company’s name.

You might try using your CEO’s name to see how it performs, especially if you’re emailing someone at a similar level.

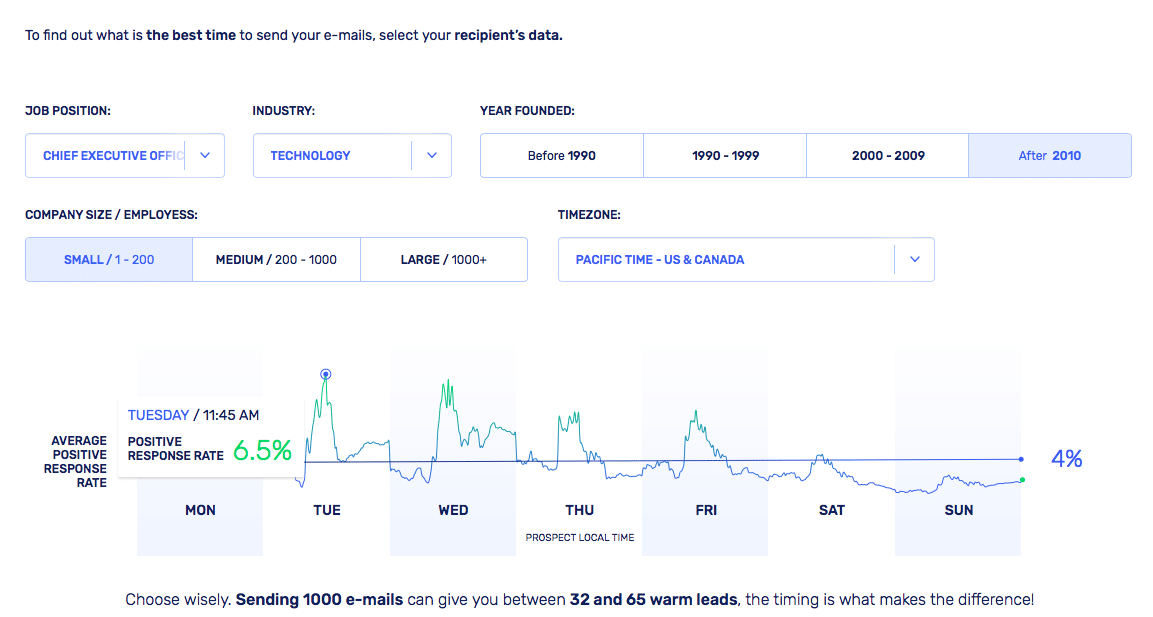

3. Sending time and date

Now, this is definitely worth exploring, though it will require quite a lot of testing. You can check two things: how people react to an email sent at a certain time of day, and on a certain day of the week.

There are hundreds of statistics I could include here, but I won’t (sorry), because each source says something else, plus everyone is different.

Some people prefer to read emails in the morning, some in the evening. Some check their emails daily, while others won’t even look at their work emails at weekends (like myself, yes I said it!).

Don’t be disappointed, because I have a great tool that will help you figure out the best time to send your emails. Here it is.

Checking what works for YOU is always the best idea.

Also, after you run a few A/B tests and get your results, you might want to segment your prospects based on their preferred email send time to maximise your open rate and response rate.
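Here is one way that segmentation could look, as a rough sketch. It assumes you can export past opens as (prospect, hour of day) pairs; that data format and the helpers `preferred_hours` and `segment_by_hour` are hypothetical, not features of any particular tool. Each prospect is assigned the hour at which they opened most often, and prospects are then grouped by that hour.

```python
from collections import Counter, defaultdict

def preferred_hours(open_events):
    """open_events: iterable of (prospect, hour_of_day) pairs from past opens."""
    hours_by_prospect = defaultdict(Counter)
    for prospect, hour in open_events:
        hours_by_prospect[prospect][hour] += 1
    # most_common(1) returns [(hour, count)]; ties go to the hour seen first.
    return {p: counts.most_common(1)[0][0] for p, counts in hours_by_prospect.items()}

def segment_by_hour(preferred):
    """Group prospects into send-time segments keyed by their preferred hour."""
    segments = defaultdict(list)
    for prospect, hour in preferred.items():
        segments[hour].append(prospect)
    return dict(segments)

# Hypothetical open history.
events = [("ana", 8), ("ana", 9), ("ana", 8), ("ben", 19), ("ben", 19), ("cai", 8)]
segments = segment_by_hour(preferred_hours(events))
print(segments)  # {8: ['ana', 'cai'], 19: ['ben']}
```

With only a handful of opens per prospect, the "preferred" hour is a noisy guess, so it makes sense to treat these segments as a starting point for further tests rather than a final answer.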

4. The content and the length of your email

You probably know all too well how important email content and messaging are. The key to sending effective cold emails is messaging that resonates with your audience. Any email that doesn’t is likely to be perceived as spam.

You can test your tone of voice, your opening lines, or whether to include graphics.

According to HubSpot, emails that trigger emotions, either positive or negative, result in 10 to 15% more responses than neutral emails.

Whatever you write, make sure it’s relevant to your prospects.

In terms of email length, since it’s a cold email, the shorter it is, the better. On average, we keep our emails to 70 words. That said, the right length will probably depend heavily on the complexity of your product or service.

If you are selling a product that doesn’t require a lot of investment or commitment then short copy might be better.

However, if it does, you might want to go for longer copy. If you are not entirely sure which category your product falls into, then yes, you’ve guessed it: just A/B test it.

Source: Marketing Experiments

5. Your target group

Another A/B testing example can be your target group. It’s especially useful if you are just starting out and you are not entirely sure who to target with your product, or you want to test a new target audience.

Just choose a smaller sample initially to evaluate their reaction and to minimise potential damage.

A/B testing best practices

There are a few rules that you should follow to make the most of your A/B tests.

1. Remember to test one element at a time

The point of split testing is to identify which element of your campaign has a positive impact on your performance. If you test more than one element then how are you supposed to figure out what impacted your conversion rate?

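You don’t have to limit yourself to just two variants: you can test several variants of the same element (often called A/B/n testing; multivariate testing, by contrast, changes several elements at once). Just take your sample size into consideration. If your sample is small, there is no point in running more than two variants, because each variant gets fewer contacts, which jeopardizes the validity of your test.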

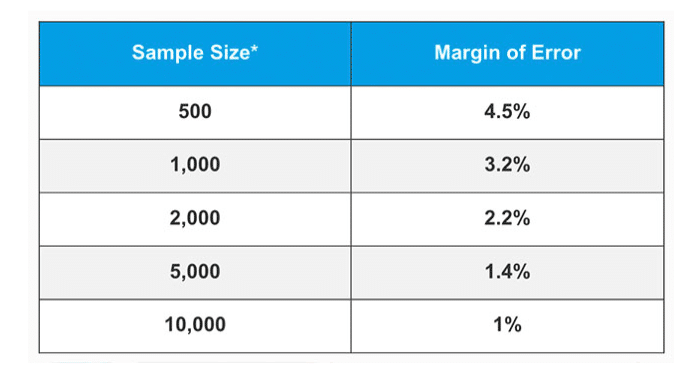

2. Select a decent sample size

Another A/B testing best practice is selecting the right sample size. Choosing an appropriate sample size for your A/B test will decrease your margin of error.
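To get a feel for what "appropriate" means, you can estimate how many contacts each variant needs before a given improvement becomes detectable. The sketch below uses the standard normal-approximation formula for comparing two proportions (two-sided test, 5% significance level, 80% power). These defaults and the example open rates are assumptions for illustration, and `sample_size_per_variant` is a hypothetical helper, not the method behind any specific calculator.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(p_baseline, p_expected, alpha=0.05, power=0.80):
    """Contacts needed in EACH variant to detect a change from p_baseline to p_expected.

    Normal-approximation formula for a two-sided, two-proportion test.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected)
    effect = p_expected - p_baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical: current open rate 20%, hoping a new subject line lifts it to 25%.
print(sample_size_per_variant(0.20, 0.25))
```

Because the required size grows with one over the square of the difference you want to detect, halving the expected lift roughly quadruples the number of contacts you need. With small samples, only fairly large differences between variants will show up reliably.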

Be aware that you always operate within a margin of error when split testing, but the larger your sample, the smaller the margin of error. Have a look at the table below.

Source: Business LinkedIn

Sample size in the table refers to the size of each variant: for example, a sample size of 500 means 500 contacts in variant A and another 500 contacts in variant B.
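The link between sample size and margin of error comes from a simple formula. For a rate such as an open rate measured on n contacts, the approximate 95% margin of error is 1.96 × √(p(1 − p) / n), which is widest when p = 0.5. The sketch below assumes that worst case; `margin_of_error` is a hypothetical helper, and its output is computed from the formula, not taken from the table.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate margin of error for a rate measured on n contacts.

    p=0.5 is the worst case (widest margin); z=1.96 corresponds to 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)

for n in (100, 250, 500, 1000):
    print(n, f"±{margin_of_error(n):.1%}")
```

This prints roughly ±9.8%, ±6.2%, ±4.4% and ±3.1%. Going from 500 to 1,000 contacts per variant shrinks the margin by only about 30%, because it scales with 1/√n. Also keep in mind that when you compare two equally sized variants, the margin on the *difference* between them is about √2 times wider than the margin on either rate alone.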

How do you ensure that the results of your split test are statistically significant? Check out this calculator.
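Under the hood, calculators like this commonly run a two-proportion z-test, although I can't say which method any particular calculator uses. Here is a minimal sketch of that test. The open counts are hypothetical, and `two_proportion_z_test` is an illustrative helper. A p-value below 0.05 corresponds to significance at the 95% confidence level.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two rates (e.g. open rates).

    Returns (z, p_value), using the pooled rate to estimate the standard error.
    """
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical results: 100/500 opens for variant A, 135/500 for variant B.
z, p = two_proportion_z_test(100, 500, 135, 500)
print(f"z = {z:.2f}, p = {p:.3f}, significant at 95%: {p < 0.05}")
```

With these numbers the 20% vs 27% difference is significant, while 100/500 vs 105/500 (20% vs 21%) would not be. That second case is exactly the kind of inconclusive result where the test is telling you the element may not matter much.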

3. Do not end your A/B test too early

How long should you run your A/B test? Until your results reach statistical significance at a confidence level of at least 95%.

Remember to run your test for full weeks to even out weekly seasonality. For example, if you start your A/B test on a Thursday, do not end it on a Monday. Wait until the following Thursday.
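If you schedule tests programmatically, the full-week rule is easy to encode. This is a small sketch with hypothetical helper names. It checks that a test ends on the same weekday it started, at least one week later, so every day of the week is covered equally often. The example dates are arbitrary.

```python
import math
from datetime import date, timedelta

def covers_full_weeks(start, end):
    """True if the test ran for one or more whole weeks (same weekday at both ends)."""
    days = (end - start).days
    return days >= 7 and days % 7 == 0

def earliest_full_week_end(start, min_days=7):
    """Earliest end date at least min_days after start that lands on the same weekday."""
    return start + timedelta(weeks=math.ceil(min_days / 7))

start = date(2024, 5, 2)  # a Thursday
print(earliest_full_week_end(start))               # 2024-05-09, the following Thursday
print(covers_full_weeks(start, date(2024, 5, 6)))  # False: ends on a Monday
```

Passing a larger `min_days`, for example when a sample-size estimate says you need more sends, simply rounds the test length up to the next whole week.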

Do you have any A/B testing best practices that you would like to share? I’ll happily add them to the article, just let me know in the comments.

What A/B testing tools can you use?

We’ve covered a lot about split testing, but you cannot run an A/B test without email automation software. So what A/B testing tools are out there? Here are a few…

Growbots – of course, it’s my number one (and not because I work there). Growbots lets you quickly and easily test different elements of your campaign, like your subject lines, email templates, sender fields and, of course, email sending times.

Our actionable insights will help you identify your best-performing campaigns and optimise your results. On top of that, we have the best Customer Success Team you could ever ask for! Their expertise in outbound sales is first class.

MailChimp – I loved using MailChimp! It’s definitely a must-have marketing tool. Just bear in mind that MailChimp cannot be used for outbound sales. You can only email your subscribers.

MailChimp lets you build up to 3 variations in each test, so you can test different content, send times and so on. The winning variant is sent automatically to your remaining subscribers.

MailTag – a Chrome extension for Gmail that lets you easily track, schedule and automate your emails. Its simple UI uses smart email tracking to send you real-time alerts on your desktop as soon as your emails are read.

Mixmax – my life has changed a little (for the better) since I started using Mixmax. You can send A/B tests from your Gmail, and it offers A/B testing options similar to those of the tools mentioned above.

You can easily track your performance and share your results across the team. It’s super intuitive to use. It also tells you instantly what the best time to send your email is, which is AWESOME!

If you commit to A/B testing, it will eventually improve your bottom line. You’ll be able to identify what works and eliminate practices that do not bring the anticipated results. Making data-backed decisions is important to ensure continuous business growth.

Just remember one thing: keep split testing, because what worked in the past might not work in the future.

Happy A/B testing, and let us know how it goes!